Building a nervous system for OpenStack

Guest

on 31 May 2016

Tags: deep learning , Juju , machine learning , OpenStack

Big Software is a new class of software composed of so many moving pieces that humans, by themselves, cannot design, deploy or operate it. OpenStack, Hadoop and container-based architectures are all examples of Big Software. The only way to address the complexity is with automatic, AI-powered analytics.

Summary

Canonical and Skymind are working together to help system administrators operate large OpenStack instances. With the growth of cloud computing, the volume of operational data has outgrown humans’ ability to cope with it. In particular, overwhelming amounts of data make it difficult to identify patterns, such as the signals that precede server failure. Using deep learning, Skymind enables OpenStack to discover patterns automatically, predict server failure and take preventative actions.

Canonical story

Canonical, the company behind Ubuntu, was founded in March 2004 and launched its Linux distribution six months later. Shortly thereafter, Amazon created AWS, the first public cloud. Canonical worked to make Ubuntu the easiest option for AWS and later public cloud computing platforms.

In 2010, OpenStack was created as the open-source alternative to the public cloud. Quickly, the complexity of deploying and running OpenStack at cloud scale showed that traditional configuration management, which focuses on instances (i.e. machines, servers) rather than running micro-service architectures, was not the right approach. This was the beginning of what Canonical named the Era of Big Software.

Big Software is a class of software made up of so many moving pieces that humans cannot design, deploy and operate it alone. The name is meant to evoke big data, defined initially as data you cannot store on a single machine. OpenStack, Hadoop and container-based architectures are all Big Software.

The problem with Big Software

Day 1: Deployment

The first challenge of Big Software is to create a service model for successful deployment; that is, to find a way to support immediate and successful installations of that software. Canonical has created several tools to streamline this process. Those tools help map software to available resources:

- MAAS: Metal as a Service, a provisioning API for bare-metal servers

- Landscape: Policy and governance tool for large fleets of OS instances

- Juju: Service modeling software to model and deploy Big Software

Day 2: Operations

Big Software is hard to model and deploy and even harder to operate, which means day 2 operations also need a new approach.

Traditional monitoring and logging tools were designed for operators who only had to oversee data generated by fewer than 100 servers. They would find patterns manually, create SQL queries to catch harmful events, and receive notifications if they needed to act. When NoSQL became available, this improved marginally, since queries would scale.
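To make the traditional approach concrete, here is a minimal sketch of a hand-written rule of the kind described above. Everything in it is an assumption made for illustration: the `events` table, its columns, the host names and the threshold are hypothetical, and an in-memory SQLite database stands in for whatever log store an operator would actually use.

```python
import sqlite3


def hosts_with_repeated_errors(conn, threshold=3):
    """Return hosts that logged at least `threshold` ERROR events.

    This is a manually written rule: an operator has to know in advance
    which pattern is harmful and encode it as a query.
    """
    rows = conn.execute(
        """
        SELECT host, COUNT(*) AS n
        FROM events
        WHERE level = 'ERROR'
        GROUP BY host
        HAVING COUNT(*) >= ?
        ORDER BY host
        """,
        (threshold,),
    ).fetchall()
    return [host for host, _ in rows]


# Hypothetical sample data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (host TEXT, level TEXT, message TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        ("node-1", "ERROR", "disk timeout"),
        ("node-1", "ERROR", "disk timeout"),
        ("node-1", "ERROR", "I/O error"),
        ("node-2", "ERROR", "disk timeout"),
        ("node-2", "INFO", "service started"),
    ],
)

# Hosts an operator would be notified about under this rule.
flagged = hosts_with_repeated_errors(conn)
```

The limitation is visible in the query itself: it only catches the pattern someone thought to write down, which is what stops working once the volume and variety of data grow.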

But that doesn’t solve the core problem today. With Big Software, there is so much data that a human operator cannot cope with it, let alone find the patterns of behaviour that result in server failure.

AI and the future of Big Software

This is where AI comes in. Deep Learning is the future of those day 2 operations. Neural nets can learn from massive amounts of data to find almost any needle in almost any haystack. Those nets are a tool that vastly extends the power of traditional system admins; in a sense, transforming their role.

Initially, neural nets will be a tool to triage logs, surface interesting patterns and predict hardware failure. As humans react to these events and label data (by confirming the AI’s predictions), the power to make certain operational decisions will be given to the AI directly: e.g. scale this service in/out, kill this node, move these containers, etc.
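One way to picture this gradual handover is a gate that tracks how often operators confirm the AI's predictions for each kind of action, and only lets the AI act alone once it has earned enough trust. The sketch below is an illustration under stated assumptions, not a description of how Canonical or Skymind implement it: the `AutonomyGate` class, the action names and both thresholds are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ActionRecord:
    confirmed: int = 0  # predictions an operator marked as correct
    rejected: int = 0   # predictions an operator marked as wrong

    @property
    def total(self) -> int:
        return self.confirmed + self.rejected

    @property
    def precision(self) -> float:
        # Share of reviewed predictions that operators confirmed.
        return self.confirmed / self.total if self.total else 0.0


class AutonomyGate:
    """Decides, per action type, whether the AI may act without review."""

    def __init__(self, min_reviews: int = 50, min_precision: float = 0.95):
        # Both thresholds are illustrative assumptions.
        self.min_reviews = min_reviews
        self.min_precision = min_precision
        self.records = defaultdict(ActionRecord)

    def record_review(self, action: str, operator_confirmed: bool) -> None:
        """Store one operator verdict on an AI prediction (a data label)."""
        record = self.records[action]
        if operator_confirmed:
            record.confirmed += 1
        else:
            record.rejected += 1

    def may_act_alone(self, action: str) -> bool:
        """True once enough reviews exist and enough of them were confirmations."""
        record = self.records[action]
        return (
            record.total >= self.min_reviews
            and record.precision >= self.min_precision
        )


# Small thresholds so the example is easy to follow.
gate = AutonomyGate(min_reviews=3, min_precision=0.9)
for _ in range(3):
    gate.record_review("scale_out", operator_confirmed=True)
gate.record_review("kill_node", operator_confirmed=True)
gate.record_review("kill_node", operator_confirmed=False)

scale_out_trusted = gate.may_act_alone("scale_out")
kill_node_trusted = gate.may_act_alone("kill_node")
```

Tracking trust per action type means a low-risk decision such as scaling a service out can be delegated long before a destructive one such as killing a node.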

Finally, as the AI learns, self-healing data centers will become standard. AI will eventually modify code to improve and remodel the infrastructure as it discovers better models adapted to the resources at hand.

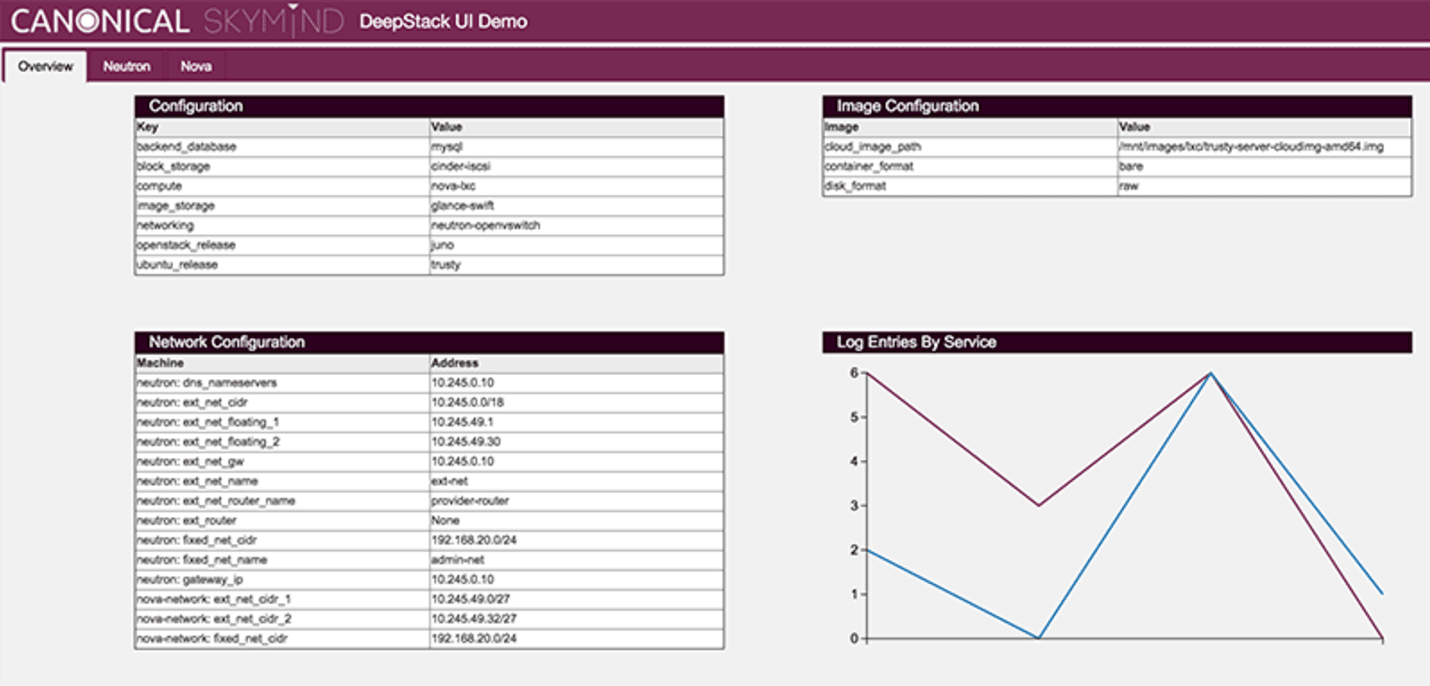

The first generation Deep Learning solution looks like this: HDFS + Mesos + Spark + DL4J + Spark Notebook. It’s an enablement model, so that anyone can do Deep Learning. But using Skymind on OpenStack is just the beginning.

Ultimately, Canonical wants every piece of software to be scrutinised and learnt in order to build the best architectures and operating tools.

Learn more

View the original article to learn more about how Canonical and Skymind are working together to solve Deep Learning problems. Alternatively, you can get in touch with our team.

Skymind

Skymind provides scalable deep learning for industry. It is the commercial support arm of the open-source project Deeplearning4j, a versatile deep-learning framework written for the JVM. Skymind’s artificial neural nets can run on desktop, mobile, and massively parallel GPUs and CPUs in the cloud to analyze text, images, sound and time series data. A few use cases: facial recognition, image search, theme detection and augmented search in text, speech-to-text and CRM.

About the author

Chris Nicholson is the founder and CEO of Skymind. He spends his days helping enterprises build Deep Learning applications.
