conjure-up Canonical Kubernetes under LXD today!

Adam Stokes

on 21 November 2016

Tags: Charmed Kubernetes, containers, docker, Juju, kubernetes, LXD

We’ve just added the Localhost (LXD) cloud type to the list of supported cloud types on which you can deploy The Canonical Distribution of Kubernetes.

What does this mean? Just like with our OpenStack offering, you can now have Kubernetes deployed and running entirely on a single machine. All moving parts are confined inside their own LXD containers and managed by Juju.

It can be surprisingly time-consuming to get Kubernetes from zero to fully deployed. However, with conjure-up and the technology underneath it, you can get Kubernetes running on a single system with LXD, or on a public cloud such as AWS, GCE, or Azure, in about 20 minutes.

Getting Started

First, we need to configure LXD to be able to host a large number of containers. To do this we need to update the kernel parameters for inotify.

On your system, open /etc/sysctl.conf (as root) and add the following lines:

fs.inotify.max_user_instances = 1048576

fs.inotify.max_queued_events = 1048576

fs.inotify.max_user_watches = 1048576

vm.max_map_count = 262144

Note: This step may become unnecessary in the future.
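If you want to see how the running kernel compares with these values, before or after applying them, here is a minimal sketch. It assumes a Linux system where sysctl keys are exposed under /proc/sys; the helper names `sysctl_path` and `check_param` are made up for this example and are not part of conjure-up or LXD.

```shell
#!/bin/sh
# Map a sysctl key such as fs.inotify.max_user_watches to its /proc/sys path.
sysctl_path() {
    printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr '.' '/')"
}

# Compare the live value of one key with the value wanted in /etc/sysctl.conf.
check_param() {
    key=$1
    want=$2
    path=$(sysctl_path "$key")
    if [ ! -r "$path" ]; then
        echo "SKIP $key (cannot read $path)"
        return 0
    fi
    have=$(cat "$path")
    if [ "$have" -ge "$want" ]; then
        echo "OK   $key = $have"
    else
        echo "LOW  $key = $have (want $want)"
    fi
}

# The four settings listed above.
check_param fs.inotify.max_user_instances 1048576
check_param fs.inotify.max_queued_events 1048576
check_param fs.inotify.max_user_watches 1048576
check_param vm.max_map_count 262144
```

Any line reported as LOW means the running kernel has not picked up that setting yet.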

Next, apply those kernel parameters (you should see the above options echoed back out to you):

$ sudo sysctl -p

Now you’re ready to install conjure-up and deploy Kubernetes.

$ sudo apt-add-repository ppa:juju/stable

$ sudo apt-add-repository ppa:conjure-up/next

$ sudo apt update

$ sudo apt install conjure-up

$ conjure-up kubernetes

Walkthrough

We all like pictures so the next bit will be a screenshot tour of deploying Kubernetes with conjure-up.

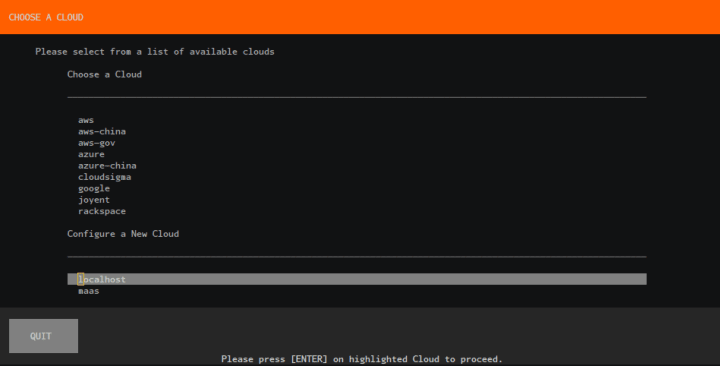

For this walkthrough we are going to create a new controller, select the localhost Cloud type:

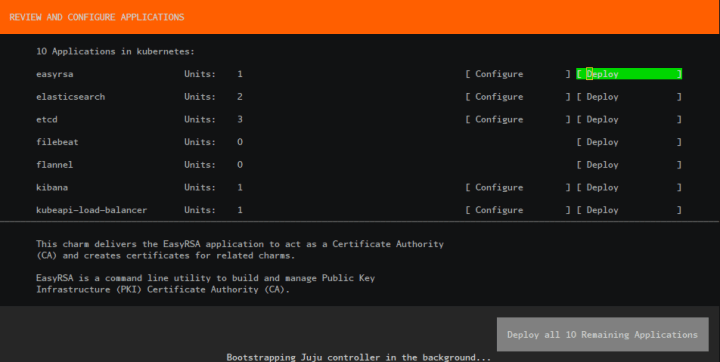

Deploy the applications:

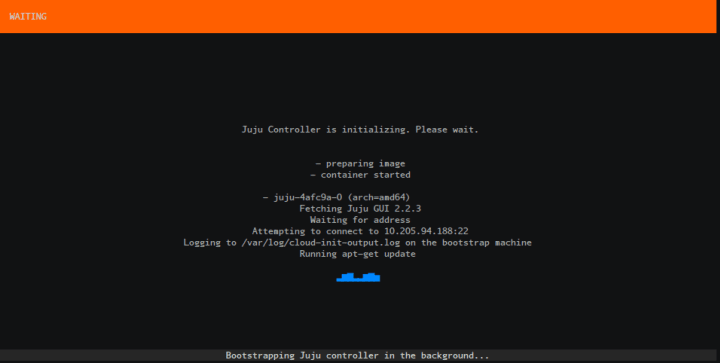

Wait for Juju bootstrap to finish:

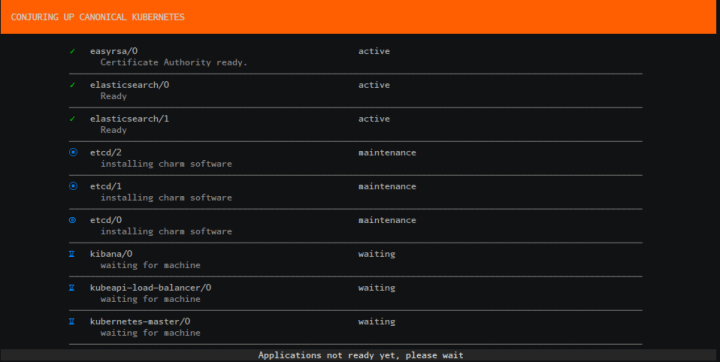

Wait for our Applications to be fully deployed:

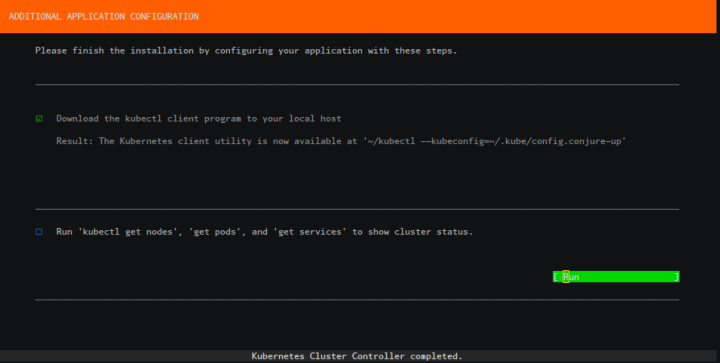

Run the final post processing steps to automatically configure your Kubernetes environment:

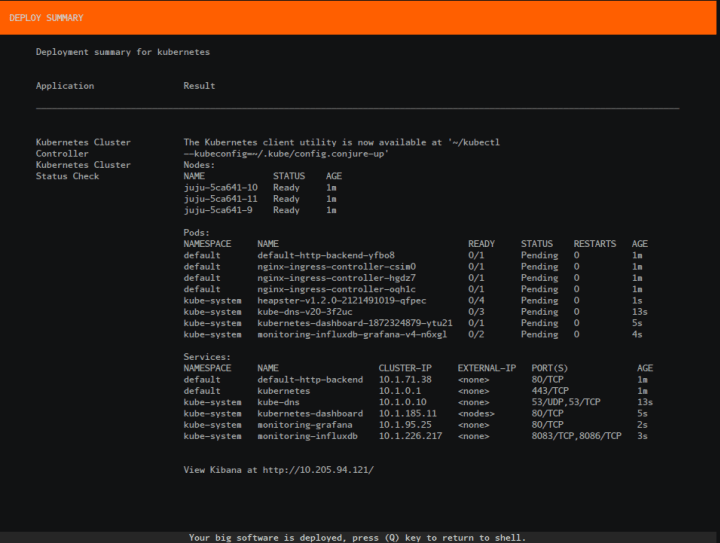

Review the final summary screen:

Accessing your Kubernetes

You can access your Kubernetes by running the following:

$ ~/kubectl --kubeconfig=~/.kube/config

Or, if you’ve already run this once, conjure-up creates a new config file, as shown in the summary screen:

$ ~/kubectl --kubeconfig=~/.kube/config.conjure-up

Alternatively, take a look at the Kibana dashboard at http://ip.of.your.deployed.kibana.dashboard (substitute the IP address of your deployed Kibana unit).
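Since the kubeconfig can end up in either of the two files mentioned above, a small helper can pick the right one for you. This is only a sketch: `pick_kubeconfig` is a hypothetical name, and it assumes that config.conjure-up, when present, is the file that belongs to this deployment. The `get nodes` subcommand is just one example of something to run against the cluster.

```shell
#!/bin/sh
# Print the kubeconfig to use from directory $1 (default: ~/.kube).
# Assumption: config.conjure-up, when present, belongs to this deployment.
pick_kubeconfig() {
    dir=${1:-"$HOME/.kube"}
    if [ -f "$dir/config.conjure-up" ]; then
        printf '%s\n' "$dir/config.conjure-up"
    else
        printf '%s\n' "$dir/config"
    fi
}

cfg=$(pick_kubeconfig)
echo "Using kubeconfig: $cfg"

# Only call kubectl if the binary is present in the home directory.
if [ -x "$HOME/kubectl" ]; then
    "$HOME/kubectl" --kubeconfig="$cfg" get nodes \
        || echo "kubectl could not reach the cluster"
fi
```

Passing the path through a shell variable also sidesteps any question of whether `~` gets expanded inside the `--kubeconfig=` argument.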

Deploy a Workload

As an example for users unfamiliar with Kubernetes, we packaged an action to both deploy an example and clean itself up.

To deploy 5 replicas of the microbot web application inside the Kubernetes cluster run the following command:

$ juju run-action kubernetes-worker/0 microbot replicas=5

This action performs the following steps:

It creates a deployment titled ‘microbots’ made up of the 5 replicas requested when the action was run. It also creates a service named ‘microbots’ which binds an ‘endpoint’, using all 5 of the ‘microbots’ pods.

Finally, it will create an ingress resource, which points at a xip.io domain to simulate a proper DNS service.
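The trick behind xip.io-style names is that the IP address is embedded in the hostname itself, and the wildcard DNS service simply answers with that address. The sketch below pulls the address back out of such a name. `xip_ip` is a made-up helper for illustration, and the hostname is the one from the sample action output in this post; yours will differ.

```shell
#!/bin/sh
# Print the IPv4 address embedded in an xip.io-style hostname.
xip_ip() {
    host=${1%.xip.io}   # drop the trailing .xip.io
    # The last four dot-separated fields form the IP address.
    printf '%s\n' "$host" |
        awk -F. 'NF >= 4 { print $(NF-3) "." $(NF-2) "." $(NF-1) "." $NF }'
}

xip_ip microbot.10.205.94.34.xip.io   # prints 10.205.94.34
```

If the wildcard DNS lookup is not available from your machine, it should be possible to make the same request by talking to the IP directly and sending the hostname as a Host header, for example `curl -H 'Host: microbot.10.205.94.34.xip.io' http://10.205.94.34/`.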

The run-action command prints the id of the queued action:

Action queued with id: e55d913c-453d-4b21-8967-44e5d54716a0

To see the result of this action, pass that id to show-action-output:

$ juju show-action-output e55d913c-453d-4b21-8967-44e5d54716a0

results:
  address: microbot.10.205.94.34.xip.io
status: completed
timing:
  completed: 2016-11-17 20:52:38 +0000 UTC
  enqueued: 2016-11-17 20:52:35 +0000 UTC
  started: 2016-11-17 20:52:37 +0000 UTC

Take a look at your newly deployed workload on Kubernetes!

Mother will be so proud.

This covers just a small portion of The Canonical Distribution of Kubernetes. Please grab it from the charmstore.
