Ceph storage on VMware

Alex Chalkias

on 25 June 2020

Tags: ceph, comparison, SAN, Storage, VMware

If you were thinking that nothing will change in your VMware data centre in the coming years, think again. Data centre storage is experiencing a paradigm shift. Software-defined storage solutions, such as Ceph, bring flexibility and reduce operational costs. As a result, Ceph storage on VMware has the potential to revolutionise VMware clusters where SAN (Storage Area Network) has been the incumbent for many years.

SAN is the default storage for VMware in most people’s minds, but Ceph is gaining momentum. In this post, we compare SAN and Ceph at a high level to show why Ceph storage on VMware makes sense as the traditional data centre moves towards lower operating costs through automation, leaving more room for R&D and innovation.

Introduction to SAN and Ceph

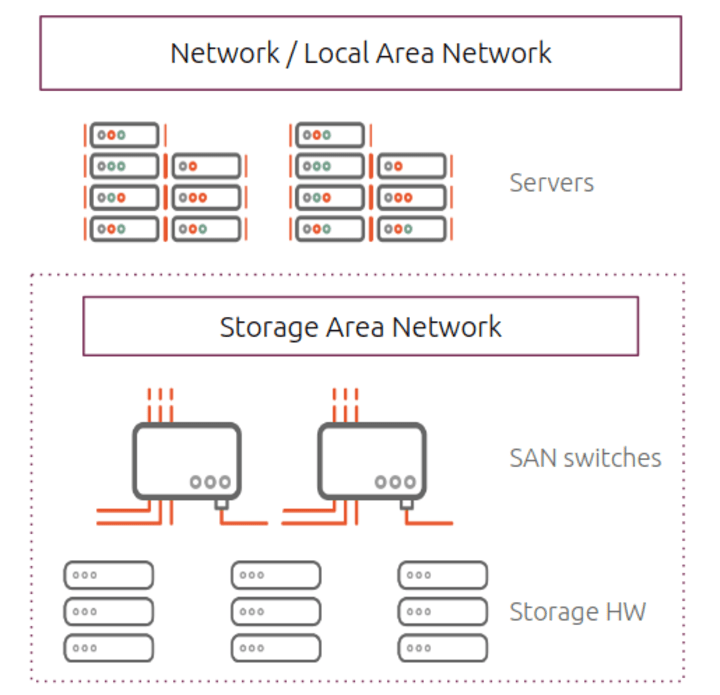

SAN arrays were introduced to solve block-level data sharing in enterprises. They expose block devices over a network using high-speed Fibre Channel or the iSCSI protocol. A SAN runs on expensive storage servers, requires either Ethernet or Fibre Channel network interface cards, and can be complex to maintain. On the upside, SAN is renowned for its performance and provides data durability through RAID configurations.

Ceph is an open source project that provides block, file and object storage through a cluster of commodity hardware over a TCP/IP network. It allows companies to escape vendor lock-in without compromising on performance or features. Ceph ensures data durability through replication and allows users to define the number of data replicas that will be distributed across the cluster. Ceph has been a central offering by Canonical since its first version, Argonaut. Canonical’s Charmed Ceph wraps all Ceph components in operational code, referred to as charms, to abstract operational complexity and drive lifecycle management automation.
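To make the replication trade-off concrete, here is a minimal Python sketch of the arithmetic behind choosing a replica count. It is a simplified model, not a Ceph sizing tool: the function names and the 300 TB figure are illustrative assumptions, and it ignores filesystem overhead, reserved free space and erasure-coded pools.

```python
def usable_capacity_tb(raw_tb: float, replicas: int) -> float:
    """Usable capacity when every object is stored `replicas` times."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return raw_tb / replicas


def tolerated_copy_losses(replicas: int) -> int:
    """Copies of an object that can be lost while one copy still survives."""
    return replicas - 1


raw_tb = 300.0  # illustrative raw capacity across the whole cluster
for replicas in (2, 3):
    usable = usable_capacity_tb(raw_tb, replicas)
    losses = tolerated_copy_losses(replicas)
    print(f"{replicas} replicas: {usable:.0f} TB usable, "
          f"tolerates losing {losses} copies of an object")
```

Raising the replica count buys durability at the price of usable capacity, which is why it is worth setting per pool according to how valuable the data is.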

SAN vs Ceph: Cost

A traditional SAN is usually expensive and leads to vendor lock-in. When it is time to scale, the SAN options are limited to buying more expensive hardware from the original vendor. Similarly, if vendor support reaches its end of life, an expensive migration may be needed. The cost of training, operations and maintenance of a SAN array is also significant.

Ceph provides software-defined storage, allowing users to choose off-the-shelf hardware that matches their requirements and budget. The servers of a Ceph cluster do not need to be of the same type, so when it is time to expand, a new breed of servers can be seamlessly integrated into the existing cluster. Moreover, when using Charmed Ceph, software maintenance costs are low. The charms are written by Ceph experts and encapsulate all the tasks a cluster is likely to undergo, such as expanding or contracting the cluster, replacing disks, or adding an object store or an iSCSI gateway.

SAN vs Ceph: Performance

In the legacy data centre, SAN arrays were prominently used for their performance as storage back-ends for databases. In the modern world, where big data and unstructured data have brought new requirements, people have shifted from SAN’s high-cost performance to lower-cost, smarter approaches based on file and object storage strategies.

Ceph clients calculate where the data they require is located rather than having to perform a look-up in a central location. This removes a traditional bottleneck in storage systems where a metadata lookup in a central service is required. This allows a Ceph cluster to be expanded without any loss in performance.
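The idea of calculating a location instead of looking it up can be illustrated with a short Python sketch. Note that this is not Ceph's actual placement algorithm (Ceph uses CRUSH); the sketch substitutes rendezvous hashing, a simpler technique with the same key property: any client that knows the list of storage nodes can compute where an object lives without asking a central service. The names `score`, `locate` and the `osd.N` and `vm-disk-N` labels are illustrative assumptions.

```python
import hashlib


def score(object_name: str, osd: str) -> int:
    """Deterministic pseudo-random weight for an (object, node) pair."""
    digest = hashlib.sha256(f"{object_name}:{osd}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def locate(object_name: str, osds: list[str], replicas: int = 3) -> list[str]:
    """Pick the `replicas` highest-scoring nodes for this object.

    Every client runs the same pure function on the same node list,
    so they all agree on the placement without a central lookup.
    """
    ranked = sorted(osds, key=lambda osd: score(object_name, osd), reverse=True)
    return ranked[:replicas]


cluster = [f"osd.{i}" for i in range(6)]
objects = [f"vm-disk-{i}" for i in range(1000)]

before = {name: locate(name, cluster) for name in objects}
# Expand the cluster by one node and recompute every placement.
after = {name: locate(name, cluster + ["osd.6"]) for name in objects}

moved = sum(1 for name in objects if before[name] != after[name])
print(f"{moved} of {len(objects)} objects changed placement after adding osd.6")
```

In this sketch, an object's placement changes only when the new node wins one of its replica slots, so expanding the cluster moves a fraction of the data rather than reshuffling everything.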

Ceph features that make the difference

SAN as the go-to solution for virtualisation infrastructure is gradually giving way to software-defined solutions, which have proven to be cheaper, faster, more scalable and easier to maintain. Ceph provides the enterprise features that are now widely relied upon. It allows scaling the cluster up and down on demand, caching data, applying policies to disks and a lot more.

Ceph block devices are thin-provisioned, resizable and striped by default. Ceph provides copy-on-write and copy-on-read snapshot support. Volumes can be replicated across geographic regions. Storage can be presented in multiple ways: RBD, iSCSI, filesystem and object, all from the same cluster.

With Ceph, users can set up caching tiers to optimise I/O for a subset of their data. Storage can also be tiered. For example, large slow drives can be assigned to one pool for archive data while a fast SSD pool may be set up for frequently accessed hot data.

With regard to integration with modern infrastructure, Ceph has been a core component of OpenStack from its early days. Ceph can back the image store, the block device service and the object storage in an OpenStack cluster. Ceph also integrates with Kubernetes and containers.

The Ceph charms provide reusable code for integration and operations automation for both OpenStack and Kubernetes. For example, the charms make it easy to provide durable storage devices from the Ceph cluster to containers.

Why should you consider Ceph as a VMware storage backend?

SAN has traditionally been considered the backend for VMware infrastructure. When designing their data centre, enterprises have to do complex TCO calculations for both their compute and storage virtual infrastructure, covering performance, placement and cost considerations. Software-defined storage can help eliminate some of that complexity, leveraging the aforementioned benefits.

Charmed Ceph has recently introduced support for the iSCSI gateway that can be deployed alongside the Ceph monitors and OSDs, and provide Ceph storage straight to VMware ESXi hosts. The ESXi hosts have had support for datastores backed by iSCSI for some time so adding iSCSI volumes provided by Ceph is straightforward. The iSCSI gateway even provides actions to set up the iSCSI targets on behalf of the end-user. Once the datastore has been created, virtual machines (VMs) can be created within VMware backed by the Ceph cluster.

One might ask why not use VMware-specific software-defined storage in this case. Ceph’s ability to provide a central place for all of a company’s data is the key differentiator here. Rather than having a dedicated VMware storage solution and another storage solution for object storage, Ceph provides everything under a single solution. Compared to both SAN and VMware storage costs, Ceph is cost-effective at scale, without compromising on the features or performance of SAN.

Learn more about Charmed Ceph or contact us about your data centre storage needs.
