From lightweight to featherweight: MicroK8s memory optimisation

Alex Chalkias

on 14 April 2021

Tags: kubernetes , memory , MicroK8s , nvidia , optimisation , resources

If you’re a developer, a DevOps engineer or just a person fascinated by the unprecedented growth of Kubernetes, you’ve probably scratched your head about how to get started. MicroK8s is the simplest way to do so. Canonical’s lightweight Kubernetes distribution started back in 2018 as a quick and simple way for people to consume K8s services and essential tools. In a little over two years, it has matured into a robust tool favoured by developers for efficient workflows, while also delivering production-grade features for companies building Kubernetes edge and IoT production environments. Optimising Kubernetes for these use cases requires, among other things, some problem-solving around memory consumption on affordable, small-form-factor devices.

Optimised MicroK8s footprint

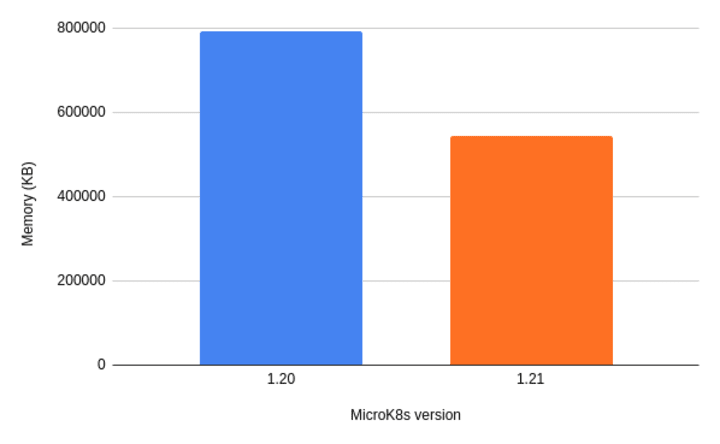

As of the MicroK8s 1.21 release, the memory footprint has been reduced by an astounding 32.5%, as benchmarked against single-node and multi-node deployments. This improvement was one of the most popular requests from the community, which is looking to build clusters using hardware such as the Raspberry Pi or the NVIDIA Jetson. Canonical is committed to pushing that optimisation further while keeping MicroK8s fully compatible with the upstream Kubernetes releases. We welcome feedback from the community as Kubernetes for the edge evolves into more concrete use cases and drives even more business requirements.

How MicroK8s shed 260MB of memory

If you’re asking yourself how MicroK8s dropped from lightweight to featherweight, let us explain. Previous versions either packaged all upstream Kubernetes binaries as they were or compiled them individually inside the snap. That package was 218MB and deployed a full Kubernetes with a memory footprint of 800MB. With MicroK8s 1.21, the upstream binaries were compiled into a single binary prior to packaging. That made for a lighter package – 192MB – and, most importantly, a Kubernetes footprint of 540MB. In turn, this allows users to run MicroK8s on devices with less than 1GB of memory and still leave room for multiple container deployments, as needed in use cases such as three-tier website hosting or AI/ML model serving.
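As a quick sanity check, the figures above can be reproduced with a few lines of Python. This is only an illustrative sketch: the helper names `reduction` and `headroom_mb` are hypothetical, and treating a "1GB" device as 1024MB while ignoring the memory used by the operating system is a simplifying assumption, not something the benchmarks state.

```python
# Figures quoted in the article, in MB.
OLD_FOOTPRINT_MB = 800   # full Kubernetes before MicroK8s 1.21
NEW_FOOTPRINT_MB = 540   # full Kubernetes as of MicroK8s 1.21
OLD_PACKAGE_MB = 218     # snap package before 1.21
NEW_PACKAGE_MB = 192     # snap package as of 1.21

# Assumption: a "1GB" device has 1024 MB, all of it available to MicroK8s.
DEVICE_MB = 1024


def reduction(before, after):
    """Return (absolute saving, percentage saving) going from before to after."""
    saved = before - after
    return saved, 100 * saved / before


def headroom_mb(device_mb, footprint_mb):
    """Memory left for workloads, ignoring the OS and other processes."""
    return device_mb - footprint_mb


if __name__ == "__main__":
    saved_mb, saved_pct = reduction(OLD_FOOTPRINT_MB, NEW_FOOTPRINT_MB)
    print(f"Memory saved: {saved_mb} MB ({saved_pct:.1f}%)")

    pkg_saved_mb, pkg_saved_pct = reduction(OLD_PACKAGE_MB, NEW_PACKAGE_MB)
    print(f"Package size saved: {pkg_saved_mb} MB ({pkg_saved_pct:.1f}%)")

    print(f"Headroom before: {headroom_mb(DEVICE_MB, OLD_FOOTPRINT_MB)} MB")
    print(f"Headroom after:  {headroom_mb(DEVICE_MB, NEW_FOOTPRINT_MB)} MB")
```

The memory saving works out to 260MB, or 32.5% of the original 800MB, which matches the headline figure. Under the 1024MB assumption, the room left for workloads roughly doubles, from 224MB to 484MB.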

Working with MicroK8s on NVIDIA

As MicroK8s supports both x86 and ARM architectures, its reduced footprint makes it ideal for devices as small as the 2GB ARM-based Jetson Nano and opens the door to even more use cases. For x86 devices, we are particularly excited to work with NVIDIA to offer seamless integration of MicroK8s with the latest GPU Operator, as announced last week. MicroK8s can consume a GPU or even a Multi-Instance GPU (MIG) using a single command and is fully compatible with more specialised NVIDIA hardware, such as the DGX and EGX.

Possible future memory improvements

Hopefully, this is the first of many milestones for memory optimisation in MicroK8s. The MicroK8s team is committed to benchmarking Kubernetes on different clouds – focusing specifically on edge/micro-clouds – and putting it to the test for performance and scalability. A few ideas for further enhancements we are looking into include combining the containerd runtime binary with the K8s services binary and compiling the K8s shared libraries into the same package. This way, the memory consumption and build times of the MicroK8s package will decrease even further, while MicroK8s remains fully upstream compatible.

If you want to learn more, you can visit the MicroK8s website or reach out to the team on Slack to discuss your specific use cases.

Latest MicroK8s resources

- Portainer recommends MicroK8s – blog

- Secure container orchestration at the edge – webinar

- MicroK8s with Windows containers – blog

- Homelab: Cloud-native adblocking on Raspberry Pi – webinar

- How to build CI/CD pipelines with MicroK8s and GitLab – tutorial

What is Kubernetes?

Kubernetes, or K8s for short, is an open source platform pioneered by Google, which started as a simple container orchestration tool but has grown into a platform for deploying, monitoring and managing apps and services across clouds.

Give your platform the deep integration it needs

Canonical Kubernetes optimises your systems for any cloud, on a per-cloud basis. Maximise performance and deliver security and updates across your whole cloud.

- Per-cloud optimisations for performance, boot speed, and drivers on all major public clouds

- Out-of-the-box cloud integration with the option of enterprise-grade commercial support